World of Statistical Analysis Programming

Welcome, dear reader, to the fascinating world of statistical programming! Statistical programming is a powerhouse of data analysis, modelling, and reporting across various domains. It’s the bridge between raw numbers and meaningful insights, unlocking the mysteries hidden within data.

But wait, there’s a catch! Coding mistakes in this field can lead to false results. Just as a slight error in a recipe can ruin a cake, even a small misstep in statistical programming can produce disastrous outcomes. That’s why statisticians and data scientists must adopt the very best practices and tools to ensure accuracy. It’s all about baking the perfect analytical cake.


Growing Market and Rising Importance of Statistical Analysis Programming

The buzz around statistical programming isn’t just academic. The global statistical analysis software market was valued at US$ 55,640 million in 2022, and it’s forecast to reach US$ 63,780 million by 2029. That’s a whole lot of number-crunching going on!

And if we zoom out, the overall programming language market was worth a staggering US$ 154.68 billion in 2021, and it’s expected to more than double by 2029, growing at a CAGR of 10.5%. Talk about a thriving field!
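Curious how those forecasts fit together? Compound growth follows end value = start value × (1 + rate)^years. The short Python sketch below uses only the figures quoted above (the helper names are our own) to check that 10.5% a year from 2021 to 2029 really does more than double the market, and to back out the growth rate implied by the statistical software forecast, which works out to roughly 2% a year.

```python
def project_value(start_value, annual_rate, years):
    """Compound growth: start_value * (1 + annual_rate) ** years."""
    return start_value * (1 + annual_rate) ** years


def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Programming language market: US$154.68 billion in 2021, growing 10.5% a year to 2029
market_2029 = project_value(154.68, 0.105, 2029 - 2021)
print(f"Projected 2029 market: US${market_2029:.1f} billion")  # roughly 344: more than double

# Statistical analysis software: US$55,640 million (2022) to US$63,780 million (2029)
stats_cagr = implied_cagr(55_640, 63_780, 2029 - 2022)
print(f"Implied annual growth: {stats_cagr:.2%}")  # roughly 2% a year
```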

What Tools Are We Talking About for Statistical Analysis Programming?

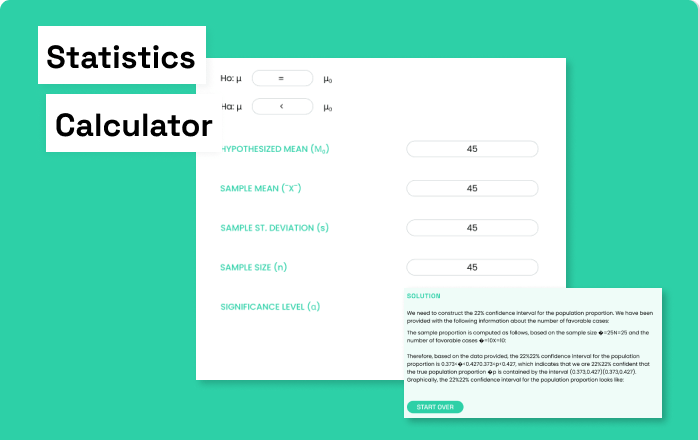

If you’re excited about diving into statistical programming (or maybe you’re already knee-deep in data), here’s a handy table showcasing a comprehensive list of statistical programming software tools you might want to explore:

| Software | Features | Pricing |
|---|---|---|
| Pandas | High-performance, easy-to-use data structures and data analysis tools for Python. | Free and open-source |
| Perl Data Language (PDL) | Scientific computing with Perl. | Free and open-source |
| Ploticus | Generates a variety of graphs from raw data. | Free and open-source |
| PSPP | Free software alternative to IBM SPSS Statistics. | Free and open-source |
| R | Free implementation of the S programming language; comprehensive statistical analysis, data visualization, integration with other languages. | Free and open-source |
| Salstat | Menu-driven statistics software. | Free and open-source |
| Scilab | Scientific computing and comprehensive statistical analysis; released under the GPL-compatible CeCILL license. | Free and open-source |
| SciPy | Python library for scientific computing; its stats sub-package is partly based on the UNIXSTAT software. | Free and open-source |
| scikit-learn | Extends SciPy with machine learning models for classification, clustering, regression, etc. | Free and open-source |
| SUDAAN | Add-on to SAS and SPSS for analyzing survey data. | Commercial; pricing not listed |
| XLfit | Add-on to Microsoft Excel for curve fitting and statistical analysis. | Commercial; pricing not listed |
| Stata | Comprehensive statistical analysis, data management, data visualization, reproducible research, automation. | Commercial; varies by edition and license |
| GraphPad Prism | Statistical analysis and graphing software. | Commercial; pricing not listed |
| SAS | Comprehensive statistical analysis, advanced modeling and reporting capabilities. | Commercial; pricing not listed |
| IBM SPSS Statistics | Comprehensive statistical analysis, advanced modeling and reporting, data visualization, automation, customization. | Commercial; varies by edition and license |
| MATLAB | Reads Excel files, good graphics, integration with languages such as Python and C++. | Commercial; pricing not listed |
| JMP | Imports many file formats, including Excel, IBM SPSS, and Stata files, alongside its native JMP format. | Commercial; pricing not listed |
| Minitab | Comprehensive statistical analysis, data visualization, data manipulation, quality assurance, and process improvement. | Commercial; varies by edition and license |

Statistical Analysis Programming

With growing demand and endless possibilities, statistical programming is more relevant than ever. From data FAIRness (keeping data Findable, Accessible, Interoperable, and Reusable) to stakeholder relationships, every aspect matters. Best practices in statistical computing mean quality assurance at every step[1].

So what are the common mistakes to avoid? What are the golden rules to follow? Stick around, and let’s find out together!

Arbitrary naming of variables

The Problem with Non-Descriptive Naming

When we choose arbitrary or non-descriptive names for variables, we’re setting ourselves up for confusion and difficulty later on. Imagine trying to figure out what m1 is supposed to mean when you come back to a project after a few weeks. It’s like trying to read someone’s mind!

Examples in R:

- Good: `mean_treatment <- 5.6` explains itself. Bad: `m1 <- 5.6` doesn’t tell us anything.

- Good: `total_sales <- 1500` gives a clear picture. Bad: `ts <- 1500` can be confusing, not least because `ts()` is also base R’s time-series function.

How Proper Naming Conventions Enhance Readability for Statistical Analysis Programming

Proper naming conventions are like a helpful guide, pointing you in the right direction. They help you understand what a variable is for without having to trace back through the code.

Examples in Python:

- Good: `average_age = 30` makes sense. Bad: `aa = 30` might leave you scratching your head.

- Good: `employee_count = 10` is descriptive. Bad: `ec = 10` could mean anything.

Example of Good vs. Bad Naming

Having meaningful names can make your code a joy to read and maintain. Here’s how good and bad naming can look side by side:

Examples in R:

- Good: `student_grades <- c(90, 80, 70)`; Bad: `sg <- c(90, 80, 70)`

- Good: `temperature_record <- c(25, 30, 27)`; Bad: `tr <- c(25, 30, 27)`

Examples in Python:

- Good: `price_list = [100, 200, 150]`; Bad: `pl = [100, 200, 150]`

- Good: `current_weather = 'sunny'`; Bad: `cw = 'sunny'`

- Good: `user_preferences = {'color': 'blue', 'size': 'medium'}`; Bad: `up = {'color': 'blue', 'size': 'medium'}`
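Naming matters most once values start interacting. Here’s a small Python sketch with made-up scores: both halves compute the same difference in means, but only the second one tells you what was compared.

```python
from statistics import mean

# Cryptic: what were a and b, and what does d mean?
a = [5.1, 6.3, 5.8]
b = [4.2, 4.9, 4.4]
d = mean(a) - mean(b)

# Descriptive: the same calculation explains itself
treatment_scores = [5.1, 6.3, 5.8]
control_scores = [4.2, 4.9, 4.4]
mean_difference = mean(treatment_scores) - mean(control_scores)

print(round(mean_difference, 2))  # 1.23
```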

Conclusion

Arbitrary naming of variables is like throwing a wrench into the gears of collaboration, maintainability, debugging, and documentation. It’s important to make code as readable as possible, so be mindful of naming conventions. After all, good naming is an art that can make coding a smooth and enjoyable experience!

By following proper naming conventions, we ensure that our code is understandable by everyone on the team, making our lives, and the lives of our fellow developers, a whole lot easier. Happy coding!

Using data types inefficiently

Issues with Using Numerical Types Instead of Factors

Sometimes, we might feel tempted to code categorical variables like treatment groups as numbers. However, this approach invites misunderstandings and errors: a model treats a numeric code as a continuous quantity, estimating a slope across the codes and implying an order and equal spacing between groups that don’t exist. In R, factors are designed to handle categorical levels like treatment and control, and using numerical types for this purpose is like fitting a square peg into a round hole!

Examples in R:

- Wrong: `treatment <- c(1, 2, 1, 2)`, where `1` and `2` represent treatment and control. Right: `treatment <- factor(c("treatment", "control", "treatment", "control"))`

- Wrong: `gender <- c(0, 1)` for male and female. Right: `gender <- factor(c("male", "female"))`
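Why does this matter in practice? In R, `lm(outcome ~ city)` with `city` stored as 1, 2, 3 fits a single slope, whereas a factor gets one coefficient per level (relative to a reference level). The minimal NumPy sketch below, with made-up data for three cities, shows the same effect using plain least squares: the numeric coding forces equally spaced predictions, while indicator (dummy) columns recover each city’s own mean. With only two groups the slope happens to equal the group difference, so the damage shows up most clearly with three or more categories.

```python
import numpy as np

# Made-up outcomes for three cities, coded 1 = New York, 2 = Los Angeles, 3 = Chicago
city_code = np.array([1, 1, 2, 2, 3, 3])
outcome = np.array([10.0, 12.0, 30.0, 32.0, 14.0, 16.0])

# Numeric coding: an intercept plus one slope across the codes
X_numeric = np.column_stack([np.ones(len(city_code)), city_code])
numeric_fit, *_ = np.linalg.lstsq(X_numeric, outcome, rcond=None)
numeric_pred = X_numeric @ numeric_fit

# Categorical coding: one indicator (dummy) column per city, no intercept
X_dummies = (city_code[:, None] == np.array([1, 2, 3])).astype(float)
dummy_fit, *_ = np.linalg.lstsq(X_dummies, outcome, rcond=None)

print("numeric-code predictions:", numeric_pred.round(1))  # 17, 19, 21: forced equal steps
print("per-city means from dummies:", dummy_fit.round(1))  # 11, 31, 15: the actual group means
```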

How R Can Efficiently Handle Categorical Levels

R has a neat solution for handling categorical levels: factors! Using factors in R can make our code more efficient and our data analysis more insightful. It’s like giving names to our categories instead of confusing numbers.

Examples in R:

- Efficient: `day_of_week <- factor(c("Mon", "Tue", "Wed"))` gives a clear understanding. Inefficient: `day_of_week <- c(1, 2, 3)` can be ambiguous.

- Efficient: `status <- factor(c("active", "inactive"))` clearly defines the categories. Inefficient: `status <- c(0, 1)` lacks context.

Practical Examples

Here’s how coding categorical variables as numerical types might look, and how we can fix it:

Examples in Python:

- Wrong: `grades = [0, 1, 1, 0]`, where `0` and `1` represent fail and pass. Right: `grades = ['fail', 'pass', 'pass', 'fail']`

- Wrong: `cities = [1, 2, 3]` for New York, Los Angeles, and Chicago. Right: `cities = ['New York', 'Los Angeles', 'Chicago']`

- Wrong: `sizes = [0, 1, 2]` for small, medium, and large. Right: `sizes = ['small', 'medium', 'large']`
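Plain strings are far better than bare numbers, but a closer Python analogue of an R factor is pandas’ `Categorical` type. It stores compact integer codes plus the list of allowed levels, and it can be ordered like an ordered factor. A minimal sketch reusing the grades and sizes examples above:

```python
import pandas as pd

# Integer codes under the hood, but the labels and allowed levels travel with the data
grades = pd.Categorical(["fail", "pass", "pass", "fail"], categories=["fail", "pass"])
print(list(grades.categories))  # ['fail', 'pass']
print(list(grades.codes))       # codes: 0 = fail, 1 = pass

# An ordered categorical captures a natural ranking, like an ordered factor in R
sizes = pd.Categorical(
    ["small", "large", "medium", "small"],
    categories=["small", "medium", "large"],
    ordered=True,
)
print(sizes.min(), sizes.max())  # small large
print(pd.Series(sizes).value_counts(sort=False))  # counts listed in level order
```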

Conclusion

The proper and efficient use of data types is not just a coding nicety; it’s a fundamental practice that has profound impacts on data quality, operational efficiency, and even the financial bottom line of organizations.

Consider the economic impact of these practices. Poor data quality costs organizations an alarming average of $12.9 million per year, according to Gartner’s estimation in 2021. IBM took it further by calculating the annual cost of data quality issues in the U.S., amounting to a jaw-dropping $3.1 trillion in 2016. These figures aren’t just abstract numbers; they translate to real lost sales opportunities, additional expenses, and potential regulatory fines.

Inefficient use of data types, such as coding categorical variables as numbers, may seem trivial at first glance, but it is exactly the kind of small data error that adds up to these costs. Correcting such errors and handling the resulting business problems has been estimated to devour 15% to 25% of a company’s annual revenue on average. It’s like throwing money down the drain!

Writing messy code

We’ve all been there—tight deadlines, late nights, and the temptation to cut corners in our code. But messy code is like a stain on a favourite shirt: it sticks out and can cause all sorts of trouble. Let’s break down why we should avoid it and look at ways to keep our code clean and tidy.

Importance of White Space and Tabbing

White space and indentation are like breadcrumbs in code. They guide us through it, making it easier to follow and understand, and in Python indentation goes further: it defines which lines belong to a function or loop. For example, consider the difference between these two Python code snippets:

Messy:

```python
def calculate_total(x,y):total=x+y;return total
```

Clean:

```python
def calculate_total(x, y):
    total = x + y
    return total
```

In R, it’s the same story:

Messy:

```r
calculateTotal <- function(x,y){total=x+y;return(total)}
```

Clean:

```r
calculateTotal <- function(x, y) {
  total <- x + y
  return(total)
}
```

The clean versions are much easier to read, right?

Dangers of Duplicated Code

Repeating code is like telling the same joke over and over; it loses its impact. Not to mention, it’s harder to maintain. If a bug sneaks into that duplicated code, you’ll have to fix it in every single copy! Here’s a comparison in Python:

Duplicated:

```python
def multiply_numbers(x, y):
    return x * y

def multiply_values(a, b):
    return a * b
```

Functional:

```python
def multiply_numbers(x, y):
    return x * y
```

And in R:

Duplicated:

```r
multiplyNumbers <- function(x, y) { return(x * y) }
multiplyValues <- function(a, b) { return(a * b) }
```

Functional:

```r
multiplyNumbers <- function(x, y) {
  return(x * y)
}
```

Why repeat yourself when you can be more efficient?

Encouraging the Use of Functions

Functions are like the secret sauce in your coding recipe. They help you reuse code and make it modular, testable, and maintainable. Instead of writing the same code multiple times, you can write it once in a function and then call it as needed.

Python Example:

```python
def greet(name):
    return f"Hello, {name}!"

print(greet("Alice"))
print(greet("Bob"))
```

R Example:

```r
greet <- function(name) {
  # paste0() adds no separators, so the output matches Python's "Hello, Alice!"
  paste0("Hello, ", name, "!")
}

print(greet("Alice"))
print(greet("Bob"))
```

See how neat and tidy that is?
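Functions pay off most in analysis code, where the same summary tends to get copy-pasted for every group. A minimal Python sketch with hypothetical data and a helper we’ve called `summarize()`:

```python
from statistics import mean, stdev


def summarize(values):
    """Return sample size, mean and sample standard deviation for one group."""
    return {"n": len(values), "mean": mean(values), "sd": stdev(values)}


# Hypothetical scores: one function call per group instead of copy-pasted blocks
groups = {
    "treatment": [5.1, 6.3, 5.8, 6.0],
    "control": [4.2, 4.9, 4.4, 4.7],
}
for group_name, scores in groups.items():
    summary = summarize(scores)
    print(f"{group_name}: n={summary['n']}, mean={summary['mean']:.2f}, sd={summary['sd']:.2f}")
```

Fix a bug or add a statistic in `summarize()` once, and every group benefits.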

Conclusion: Don’t Let Messy Code Mess Up Your Day

Writing messy code might seem like a minor issue, but the consequences can be far-reaching. It affects not just readability and maintainability; it can lead to serious business losses. From the 1996 Ariane 5 failure, where a software error destroyed a rocket and its payload worth hundreds of millions of dollars, to the 17.3 hours a week developers reportedly spend on maintenance work such as debugging and refactoring, the impact is real and significant.

So next time you’re tempted to ignore those tabbing rules or duplicate some code, remember the bigger picture. Think about your colleagues, your future you, and even your company’s bottom line. Give your code the love and attention it deserves. After all, clean code is happy code, and happy code makes for happy developers. Let’s keep it clean, friends!

Hard Coding

Hard Coding: Taking the Easy Route?

Ah, hard coding—often a coder’s guilty secret. We’ve all done it at some point, right? But as tempting as it may be to plug in those numbers directly, it can lead to a tangled web of problems down the line. Let’s explore why and see what we can do instead.

Explanation of Hard Coding vs. Using Constants

Imagine you’re painting a mural, and instead of mixing colors from primary shades, you buy a new can for every hue you need. Sure, it works, but it’s neither efficient nor flexible. Hard coding is similar: it means embedding specific values directly in your logic, like writing `3.14 * 10 * 10` instead of giving those numbers names.

Now, consider using constants. They’re like your primary colors, defined once and used throughout the code. For example, RADIUS = 10. Suddenly, you have a standard that’s easy to manage and adjust. 🎨

Here’s a comparison in Python:

Hard Coding:

```python
def calculate_area():
    return 3.14 * 10 * 10
```

Using Constants:

```python
import math

RADIUS = 10

def calculate_area():
    # math.pi is more precise than a hand-typed 3.14
    return math.pi * RADIUS * RADIUS
```

And in R:

Hard Coding:

```r
calculateArea <- function() {
  return(3.14 * 10 * 10)
}
```

Using Constants:

```r
RADIUS <- 10

calculateArea <- function() {
  # R's built-in constant pi is more precise than a hand-typed 3.14
  return(pi * RADIUS * RADIUS)
}
```

See how clean and logical the constant approach is?

Why Constants are Preferred

So why are constants such a big deal? Let’s break it down:

- Flexibility: Constants allow you to make changes in one place, rather than hunting down every instance of a hard-coded value. Imagine changing the radius in hundreds of places versus just once!

- Maintainability: Constants make your code more readable, making it easier for you or your team to understand and maintain the code later on. Nobody likes digging through spaghetti code to find that one value.

- Reusability: By using constants, you make your code more reusable in other parts of your project or even in other projects. It’s like having a universal remote for all your devices.

- Scalability: Constants help your code grow gracefully. You can scale up without the headaches of tracking down hard-coded values.

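- Security: If you hard code sensitive information such as passwords or API keys, you’re leaving your secrets out in the open, in every copy of the script and in its version history. Keep such values out of the code entirely, for example in environment variables or a configuration file that isn’t committed, and read them at run time.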
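To make the security point concrete, here’s a minimal Python sketch that reads a credential from an environment variable at run time instead of hard coding it. The variable name `STATS_DB_PASSWORD` is hypothetical; use whatever name your team or deployment tool defines.

```python
import os

# Avoid: DB_PASSWORD = "s3cret" would live in every copy of the script and in Git history


def get_db_password():
    """Read the database password from the environment at run time."""
    password = os.environ.get("STATS_DB_PASSWORD")  # hypothetical variable name
    if password is None:
        raise RuntimeError("Set the STATS_DB_PASSWORD environment variable first.")
    return password
```

Call `get_db_password()` where the connection is opened; the actual value never has to appear in your scripts or your repository.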

Conclusion: Choose Wisely, Code Wisely

Hard coding might seem like a shortcut, but it’s a path brimming with obstacles. Lack of flexibility, reduced maintainability, limited reusability, and even security risks—it’s a minefield that can turn a promising project into a maintenance nightmare.

But there’s a hero in this story: constants. They give us a way to write code that’s logical, clean, and efficient. It’s like having a well-organized toolbox where everything has its place.

So next time you’re tempted to hard code a value, think about the future you, the one who has to maintain that code. Use constants like SAMPLE_SIZE = 120 or NR_IMPUTATIONS = 20, and let your code shine with clarity and elegance.
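Here’s what that can look like at the top of an analysis script. This is an illustrative Python sketch, not a recommended imputation method: it simulates data with `SAMPLE_SIZE` observations, fills the gaps `NR_IMPUTATIONS` times with a simple random hot-deck draw, and averages the resulting means (the pooled point estimate under Rubin’s rules). `RANDOM_SEED` is an extra, hypothetical constant; for real multiple imputation, use a dedicated package.

```python
import random
from statistics import mean

# Analysis settings, defined once at the top of the script
SAMPLE_SIZE = 120
NR_IMPUTATIONS = 20
RANDOM_SEED = 2023  # hypothetical extra constant: fixes the random draws for reproducibility

rng = random.Random(RANDOM_SEED)

# Simulated measurements with roughly 10% missing (None)
data = [rng.gauss(50, 10) if rng.random() > 0.1 else None for _ in range(SAMPLE_SIZE)]
observed = [x for x in data if x is not None]


def impute_once(values, donors, rng):
    """Fill each missing value with a random draw from the observed values (simple hot-deck)."""
    return [x if x is not None else rng.choice(donors) for x in values]


# Pool the point estimate by averaging across the imputed datasets (Rubin's rules)
imputed_means = [mean(impute_once(data, observed, rng)) for _ in range(NR_IMPUTATIONS)]
pooled_mean = mean(imputed_means)
print(f"n = {SAMPLE_SIZE}, imputations = {NR_IMPUTATIONS}, pooled mean = {pooled_mean:.2f}")
```

Changing the sample size or the number of imputations now means editing a single line, and the rest of the script follows along.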

Remember, coding isn’t just about solving problems; it’s about creating solutions that last. Let’s make our code a masterpiece, not a puzzle.

Manual Embedding of Results and Figures

Manual Embedding of Results and Figures: A Thing of the Past?

We live in an era where data rules the world, and we often find ourselves in the middle of numbers, tables, graphs, and complex calculations. But creating reports filled with this data manually? That’s so last century!

Enter computational notebooks and dynamic reporting, turning the arduous process into a streamlined and interactive experience. Let’s take a journey through this innovative way of working and see how it can revolutionize the way we present data.

The Benefits of Computational Notebooks

Computational notebooks are like a playground for data scientists and analysts. They allow you to mix code, computations, explanatory text, tables, and figures all in one place. Imagine cooking your favorite dish and having all the ingredients ready at your fingertips. Sounds convenient, right? Here’s why they rock:

- Interactivity: You can run code snippets, see the results instantly, and make changes on the fly. It’s like having a conversation with your data.

- Collaboration: Share your insights, methodologies, and findings with your team in a way that’s clear and replicable. It’s teamwork made easy!

- Versatility: Embed various elements, from plain text to intricate plots, creating a comprehensive and visually appealing report. Think of it as a painting with data.

How Dynamic Reporting Simplifies the Process

Dynamic reporting takes things up a notch. It’s about creating reports where changing the data automatically updates the report. No more manual labour, just pure efficiency. Here’s how it unfolds:

- In R, you can pair up with LaTeX using `.Rnw` files, and create magical documents with R Markdown. The knitr package brings everything together, letting you generate reports in HTML, Word, RTF, and PDF formats.

- In Python, tools like ReportBro, Plotly, and Pandas enable you to generate dynamic reports. Want interactive data visualizations? Plotly’s got you covered. Need PDF or Excel output? Say hi to ReportBro!

Tools and Examples: The Dynamic Duo

Both R and Python offer incredible tools for dynamic reporting:

R and LaTeX:

- Use `.Rnw` files to embed R code chunks within LaTeX markup.

- Utilize R Markdown for eye-catching reports in different formats.

- The knitr package blends everything for a smooth report-generation experience.

Python’s Power Trio:

- ReportBro: Design your report templates with ease and export in multiple formats.

- Plotly: Dive into interactive data visualizations, even for specialized data like financials and cryptocurrencies.

- Pandas: Manipulate and analyze data to create detailed and visually stunning reports.
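The core idea behind dynamic reporting, computing every number in the report from the data rather than typing it in, fits in a few lines of plain Python. The sketch below is a minimal, hypothetical example that builds a Markdown report with the standard library only; tools like R Markdown, knitr, or ReportBro do the same job with far more polish.

```python
from statistics import mean, stdev


def build_report(group_scores):
    """Return a Markdown report in which every number is computed from the data."""
    lines = ["# Results", "", "| Group | n | Mean | SD |", "|---|---|---|---|"]
    for group, scores in group_scores.items():
        lines.append(f"| {group} | {len(scores)} | {mean(scores):.2f} | {stdev(scores):.2f} |")
    all_scores = [s for scores in group_scores.values() for s in scores]
    lines.append("")
    lines.append(f"Across all {len(all_scores)} participants, the mean score was {mean(all_scores):.2f}.")
    return "\n".join(lines)


# Hypothetical data: edit it, re-run, and every figure in the report updates
scores = {"treatment": [5.1, 6.3, 5.8, 6.0], "control": [4.2, 4.9, 4.4, 4.7]}
report_text = build_report(scores)
print(report_text)
```

To keep a copy, save it with something like `Path("report.md").write_text(report_text)` from `pathlib`, and simply re-run the script whenever the data changes.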

Wrapping Up: Welcome to the Future of Reporting

Manual embedding of results and figures may feel nostalgic, but why stick to the past when the future is so bright? Computational notebooks and dynamic reporting are not just trends; they’re transforming the way we handle data.

With the right tools, creating engaging and informative reports becomes an enjoyable and efficient task. Whether you’re a Pythonista or an R lover, the world of dynamic reporting awaits you with open arms.

So why not explore these tools and create something amazing? After all, data is more than numbers; it’s a story waiting to be told. Let your creativity flow and turn those dull tables and charts into a masterpiece.

Inefficient Use of Packages

Inefficient Use of Packages: Finding the Sweet Spot

We’ve all heard the saying, “Too much of a good thing can be bad.” When it comes to programming, this adage rings true, especially with the use of packages. It’s like being at a buffet; you want to enjoy the variety without overloading your plate.

Balancing Efficiency with Complexity: The Art of Juggling

Including packages in statistical programming can make your life easier and your code safer. It’s delegating responsibility, like handing off some heavy lifting to a friend. But like a juggler with too many balls, including too many packages can lead to complexity and inefficiency. Let’s break it down:

- Efficiency: Using packages saves time and effort. It’s like having a toolbox filled with specialized tools that do the job for you.

- Complexity: More packages mean more dependencies. And with more dependencies, you might find yourself tangled in a web of compatibility and maintenance issues.

Unnecessary Dependencies: The Roadblocks to Reproducibility

In the world of programming, reproducibility is key. It’s about making sure that your code works the same way every time, on every system. But too many packages can be like roadblocks on the path to reproducibility:

- Slow Down: Every package you load adds start-up time and memory overhead, like a traffic jam slowing down your drive home.

- Maintenance: The more packages you include, the more you have to maintain. It’s like having a garden filled with plants; they all need watering, but some might just be weeds.
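One practical habit supports both minimal dependencies and reproducibility: list exactly which packages your analysis imports and record their installed versions. Here’s a minimal Python sketch using the standard library’s `importlib.metadata`; the package list is only an example. In R, `sessionInfo()` reports loaded package versions, and the renv package can record them in a lockfile.

```python
from importlib.metadata import PackageNotFoundError, version


def package_versions(package_names):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in package_names:
        try:
            versions[name] = version(name)
        except PackageNotFoundError:
            versions[name] = None
    return versions


# List only the packages the analysis actually imports (example names)
required_packages = ["numpy", "pandas", "scipy"]
for name, installed in package_versions(required_packages).items():
    print(f"{name}=={installed}" if installed else f"# {name} is not installed")
```

The printed `name==version` lines can go straight into a pinned requirements file.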

Best Practices for Including Packages: The Golden Rules

So how do you strike the right balance? Here are some golden rules to live by:

- Keep it Minimal: Only include the packages that you really need. It’s like packing for a trip; you wouldn’t take your whole wardrobe, would you?

- Embrace Vectorized Operations: In R, and in Python with NumPy, they’re usually much faster than explicit loops and can significantly speed up your code. Think of it as taking the express train instead of the local.

- Avoid Unnecessary Copying: Don’t duplicate data when you can reference the original. It’s like having a map instead of drawing your own every time you go somewhere.

- Profile Your Code: Identify bottlenecks and areas for optimization. It’s like a health check-up for your code, pinpointing where it needs a boost.

- Choose the Right Package: Different packages have different strengths. Pick the one that fits your task and audience like a well-tailored suit.
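Here’s a minimal NumPy sketch of the vectorization, copying, and profiling rules above, on simulated data: a loop and a vectorized expression standardize the same values, `timeit` times both (for whole-program profiling, Python’s `cProfile` module is the usual tool), and `np.shares_memory` shows that slicing returns a view rather than a copy. Exact timings will vary by machine.

```python
import timeit

import numpy as np

rng = np.random.default_rng(42)
values = rng.normal(loc=100, scale=15, size=100_000)  # simulated data


def standardize_loop(x):
    """Standardize element by element with an explicit Python loop."""
    m, s = x.mean(), x.std()
    out = np.empty_like(x)
    for i in range(len(x)):
        out[i] = (x[i] - m) / s
    return out


def standardize_vectorized(x):
    """Standardize with one array expression; NumPy runs the loop in compiled code."""
    return (x - x.mean()) / x.std()


# Same answer either way...
assert np.allclose(standardize_loop(values), standardize_vectorized(values))

# ...so measure before optimizing: time both versions
loop_seconds = timeit.timeit(lambda: standardize_loop(values), number=3)
vectorized_seconds = timeit.timeit(lambda: standardize_vectorized(values), number=3)
print(f"loop: {loop_seconds:.3f}s, vectorized: {vectorized_seconds:.3f}s")

# Avoiding copies: basic slicing returns a view that shares memory with `values`
first_half = values[: len(values) // 2]
print(np.shares_memory(first_half, values))     # True: no data copied
print(np.shares_memory(values.copy(), values))  # False: an explicit copy
```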

Wrapping Up: The Recipe for Success

Including packages in your code is both an art and a science. You want the efficiency without the unnecessary complexity. You’re aiming for a smooth ride without unnecessary roadblocks.

By following the best practices, you’re not just writing code; you’re crafting a masterpiece that’s efficient, maintainable, and reproducible.

So next time you find yourself reaching for a new package, think about whether you really need it. Make sure it adds value, not complexity. After all, in programming, as in life, sometimes less is more.

Losing Old Versions of Code

Losing Old Versions of Code: Why It’s Like Losing Family Photos

Imagine your family photo albums being lost forever. Those priceless memories gone, never to be seen again. Losing old versions of code is somewhat similar to losing those precious photos, but with implications that might cost millions.

Importance of Version Control Tools like Git: The Time Machines of Code

Version control is like a time machine for your code. It allows you to go back to previous versions, explore different paths, and even recover from mistakes. It’s a lifeline in the ever-changing landscape of technology. Here’s why:

- Bug Fixes: Ever dealt with a pesky bug? Old versions might hold the solution. Lose the code, and you’re left scratching your head.

- Compatibility: With technology moving fast, older code might clash with newer systems. If you lose the old version, adjusting to the new might become a Herculean task.

- Training: Imagine teaching everyone to use a new version overnight! If the old version’s gone, you’re facing a steep learning curve.

- Rollback: Sometimes, you need to go back to go forward. Without old versions, rolling back is like trying to unscramble an egg.

- Security: Old code may have weak spots that newer versions have fortified. Lose the old code, and those vulnerabilities could haunt you.

Now, if these scenarios feel intimidating, there’s hope in the form of tools like Git. One developer survey found that 87.2% of respondents use Git for version control. They’re onto something!

Facilitating Collaboration: The Symphony of Teamwork

Version control isn’t just about saving past versions. It’s also about facilitating collaboration. Like musicians in an orchestra, developers can work together in harmony:

- Branching and Merging: Multiple team members working on the same code? Version control allows them to work on different branches and merge seamlessly.

- Conflict Resolution: When conflicts arise, version control provides tools to resolve them. It’s like having a mediator at a debate.

- Tracking Changes: Ever wondered who made a change or why? With version control, every alteration is documented. It’s the detective’s log of code.

Platforms like GitHub and GitLab: The Command Centers

Enter the platforms that make all of this possible. GitHub and GitLab are like the command centres, providing:

- Repositories: Safe storage for all your code versions. Like a vault for your digital treasures.

- Collaboration Tools: A playground for teamwork, where code is shared, reviewed, and improved.

- Integration: A hub connecting various tools, from development to deployment. A Swiss Army knife for developers!

Holding On to the Past to Shape the Future: A Balancing Act

Losing old versions of code can be catastrophic. The stakes are real: the average cost of a data breach alone has been put at $3.86 million, and a full version history is often what lets you trace and patch the code responsible. Version control tools like Git, along with platforms like GitHub and GitLab, are the safeguards, enabling businesses to fix bugs, ensure compatibility, train staff, roll back changes, and fortify security.

In this world where code evolves rapidly, holding on to the past is not an act of nostalgia; it’s a strategy to shape the future. Embrace version control, celebrate collaboration, and never lose sight of where you’ve come from. After all, those old versions are not just code; they’re the stepping stones of innovation.

No Code Testing

No Testing in Code: Like Walking a Tightrope Without a Safety Net

Testing code may not have the thrill of a circus act, but skipping it is like walking a tightrope without a safety net. Why would you want to take that risk? Let’s dive into the world of testing in code and understand why it’s so vital.

The Role of Testing in Ensuring Code Quality: Aiming for Perfection

Testing conditions with logical expressions, using functions such as assert() (base R’s `stopifnot()` or Python’s `assert` statement), is like having a sharpshooter on your coding team. It helps you hit the mark every time.

- Confidence Boost: Knowing that your code is working as expected adds a layer of confidence. It’s like a security blanket for developers.

- Debugging Tool: Found an error? Testing functions can help you pinpoint it like a GPS for code mistakes.

- Why Test Obvious Things: Ever tripped over an obvious obstacle? Testing even the obvious ensures that you don’t stumble over hidden surprises in your code.

Package developers often use unit tests, automated tests that ensure the expected behavior of code. Think of them as quality control inspectors on a production line.
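A minimal sketch of both ideas in Python, using a hypothetical `mean_difference()` helper: an `assert` guards the input inside the function, and a small `unittest` suite checks a known value, an obvious case, and an invalid input. One caveat: Python skips `assert` statements when run with the `-O` flag, so for checks that must always run, raise an exception such as `ValueError` explicitly. In R, `stopifnot()` and the testthat package play similar roles.

```python
import unittest
from statistics import mean


def mean_difference(treatment, control):
    """Difference in group means (hypothetical helper); both groups must be non-empty."""
    assert len(treatment) > 0 and len(control) > 0, "groups must not be empty"
    return mean(treatment) - mean(control)


class TestMeanDifference(unittest.TestCase):
    def test_known_value(self):
        self.assertAlmostEqual(mean_difference([2, 4], [1, 1]), 2.0)

    def test_identical_groups_give_zero(self):
        # Even the "obvious" case deserves a test
        self.assertEqual(mean_difference([3, 5], [3, 5]), 0)

    def test_empty_group_is_rejected(self):
        with self.assertRaises(AssertionError):
            mean_difference([], [1, 2])


if __name__ == "__main__":
    unittest.main(argv=["mean-difference-tests"], exit=False)
```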

When No Testing Goes Wrong: A Slippery Slope

Ignoring tests in statistical programming is like ignoring a leaking faucet; it might not seem significant, but the consequences can be staggering.

- Coding Mistakes Lead to False Results: Like a small typo turning a love letter into a breakup note, a small mistake in code can deliver the wrong message, sometimes with serious consequences.

- Statistical Software Engineering Challenges: With so many variables at play, such as small sample sizes and uncontrolled factors, not testing is like trying to bake without measuring the ingredients.

- Poorly-Designed, Untestable Code: Unchecked code can become like tangled Christmas lights, complicated and nearly impossible to untangle.

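- Global State Challenges: Ever tried to read someone else’s notes? Global state makes code just as confusing: when a function depends on variables that can change anywhere else in the program, its behavior is hard to predict, and testing becomes a nightmare.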

- Excel Errors Leading to Wrong Results: A famous case in statistics, where an Excel error led to substantially wrong results, shows how testing isn’t just for traditional code but also for spreadsheets!
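To see why global state makes testing so hard, compare two ways of computing a mean in this hypothetical Python sketch. The first reads a global list that other code can change, so the same call can return different answers; the second takes its data as an argument, so a test only needs to check inputs and outputs.

```python
observations = [4.0, 5.0, 6.0]  # global, mutable list


def add_observation(value):
    """Modifies the global list as a side effect."""
    observations.append(value)


def mean_global():
    """Depends on whatever has happened to the global list so far."""
    return sum(observations) / len(observations)


def mean_of(values):
    """Pure function: the result depends only on its argument."""
    return sum(values) / len(values)


print(mean_global())  # 5.0
add_observation(10.0)
print(mean_global())  # 6.25: same call, different answer
print(mean_of([4.0, 5.0, 6.0]))  # 5.0, every time
```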

Benefits and Business Implications: Why Testing Isn’t Optional

Here’s where testing transcends code and touches business:

- Catch Errors Early with TDD: Test-driven development (TDD) is like having a safety rehearsal before the big show. It catches errors early and enhances the quality, leading to a star performance.

- Avoid Financial Pitfalls: From costly bug fixing to substantial financial penalties, the lack of testing is like a road filled with financial potholes.

- Attract and Retain Talent: Want to win the talent race? Quality code and testing practices act as magnets for top software engineers.

- Boost Productivity: Streamlining workflows with proper testing is like oiling a machine; everything runs smoother and faster, leading to better revenue and opportunities.

Don’t Gamble with Your Code: Test, Validate, Thrive

In the end, not testing your code is not an option. It’s a gamble that could cost time, money, and reputation. So embrace the safety net of testing. Utilize functions like assert(), practice unit testing, and don’t skip on testing even the obvious.

Remember, code without tests is a mystery novel without the last chapter. You’ll never know what twists and turns lie ahead until it’s too late. So don’t walk the tightrope without a net; test your code and walk confidently towards success.

No Code Review

No Code Review: A Risky Game of Coding Roulette

Programming can sometimes feel like a game. But when it comes to code review, playing games can lead to chaos. Let’s explore what happens when coding goes solo and code review gets sidelined. Buckle up; it’s a rollercoaster ride!

Pair Programming: Two Minds are Better Than One

Picture this: Two people, one computer, and a coding task. This isn’t a riddle; it’s pair programming!

In pair programming, one person, the driver, writes the code, while the other, the navigator, reviews each line, ready to swoop in with suggestions like a coding superhero.

Not only does this generate peer-reviewed code, but it’s like a classroom where knowledge is shared, questions are answered, and learning flows like a two-way street.

The Beauty of Peer-Reviewed Code: Many Eyes Make Bugs Shy

Having a second pair of eyes on your code is like having a cooking buddy in the kitchen. They spot you reaching for the sugar instead of the salt before it goes into the stew.

- Catching Bugs Early: Tracking down a bug once it has reached production is like finding a needle in a haystack. Catch it during review, and you’ve saved a bundle of time and money.

- Uniform Design: Code review ensures that the code fits together like pieces of a puzzle. Every part aligned means a beautiful picture at the end.

- Learning Experience: Ever wish for a mentor in your pocket? Code review is like having a knowledgeable friend always there to guide you and answer your questions.

When No Review Goes Wrong: A Coding Horror Story

Skipping code review is like skipping breakfast; it might seem like a time-saver, but the long-term consequences aren’t worth it.

- Errors Sneaking In: Without review, errors creep into the code like uninvited guests at a party.

- Decreased Productivity: Ironically, avoiding code review to save time can slow down productivity like a traffic jam on the coding highway.

- Missed Goals and Deadlines: Imagine missing your flight because you stopped for a coffee. Without code review, you risk missing crucial releases or business goals.

- Inconsistencies in Codebase: A codebase without review can become a messy room where nothing matches, leading to confusion and chaos down the line.

- Procrastination and Missed Feedback: Lack of clear guidelines can turn code review into a task that’s always pushed to “later.” The result? Missed opportunities for growth and improvement.

The Hidden Costs: Penny Wise, Pound Foolish?

Skipping code review might seem like a cost-saver, but it can be a financial black hole.

- Expensive Bug Fixes: Like paying for a luxury hotel when you could have booked early, fixing bugs later can cost up to 10 times more.

- Hidden Expenses: Time spent on reviewing and addressing feedback can add up, turning a free task into a costly one.

- The Cost of Outside Help: Need a freelancer for code review? Hold onto your wallet; it can range from $200-$300 per hour.

Code Review: Not a Luxury, but a Necessity

Playing fast and loose with code review is like playing with fire. You might not get burned right away, but the risks are high.

Embracing code review and pair programming is like investing in a safety net for your code. It catches mistakes, fosters learning, and ensures that your code doesn’t just function, but thrives.

So, don’t gamble with your code’s quality. Treat code review not as an option but as a VIP guest in your development process. Because in the coding world, two heads are often better than one.

Conclusion: Coding Mastery and the Road Ahead

We’ve taken a deep and analytical look at several facets of coding, from variable naming to code review. Now, it’s time to synthesize these insights and leave you with some final thoughts that can guide your coding practice.

Key Takeaways: The Core of Coding Excellence

- Testing is Essential: Ignoring testing can lead to major setbacks, so use those assert() functions and unit tests to keep your code’s quality high.

- Peer Review and Collaboration: Through practices like pair programming, you not only produce better code but foster a rich learning environment.

- Version Control Matters: It’s more than just a backup system; version control is the heartbeat of successful development projects. It allows for collaboration, tracking changes, and can be a lifesaver when something goes wrong. It’s your coding time machine, enabling you to move back and forth through your code’s history.

- Avoiding Common Mistakes: All the areas we’ve discussed, if neglected, can lead to costly errors. Don’t let that happen!

Why These Lessons Matter: Building Success

- Long-term Impact: Every coding decision has long-term consequences. Testing, code review, and version control are investments in your code’s future. Think of them as insurance policies for success.

- Professional Growth: Embracing these practices doesn’t just make you a better coder; it makes you a more thoughtful and adaptable professional. It’s about crafting not just code, but a career.

- Business Implications: From financial loss to reputation damage, the costs of neglecting these practices can be immense. Protect yourself and your organization by making these practices second nature.

Next Steps: Your Coding Adventure

- Apply What You’ve Learned: These aren’t just theoretical lessons; they’re practical tools for your coding toolkit.

- Share the Knowledge: Help others on their coding journey by sharing these insights. It’ll deepen your understanding and create a culture of excellence.

- Never Stop Learning: The coding landscape is always changing. Keep growing, exploring, and challenging yourself.

The Last Word: Unleash Your Potential (Statistical Analysis Programming Practices)

You’ve got the insights; now it’s time to make them work for you. Coding is more than just syntax and algorithms; it’s an art, a science, and a way of thinking. Embrace testing, peer review, version control, and continuous learning, and you’ll be on the path to greatness.

Happy coding, and may your journey be filled with discovery, success, and joy.